Bad OSINT: between bad AI and bad readers

If OSINT analysts are producing bad OSINT, is it because of bad AI or because the readers do not care?

An excellent note from dutch_osintguy (Nico Dekens) sounds the alarm.

OSINT analysts rely on AI too much and in the wrong way. Critical thinking is endangered, and the quality of OSINT investigations and studies is at risk.

He is entirely right, but I do not think the problem comes from AI.

What we have is a readership crisis.

Readers want “OSINT”. They do not want to bother reading a 20-page study with traceable and reproducible evidence.

They want the conclusion, the executive summary, the 280-character tweet (or 300-character Bluesky post) or the LinkedIn post. They don’t want to read OSINT; they want to share OSINT conclusions. They want the magical label that automatically buys the public’s trust and makes them look knowledgeable, because of the “INT”: this mythical “intelligence” thing with its sexy James Bond tone, its rewarding “I’m in an elite circle that knows things”, and its synonym for “clever / smart”: Intelligence.

Let’s face it: many OSINT consumers just want the conclusions. They will not try to check the traceability or reproducibility of the evidence, let alone try to reproduce or trace it themselves.

“An OSINT Study says that…” like a magical Open Sesame that will automatically stamp the rest of the sentence with “truth” and “ethical”.

But if our readers do not bother to double-check, assess the reproducibility or check the traceability, why would we bother to write all this stuff? Let AI do it; the reader won’t care anyway. Readers want “OSINT says this is an apple”. They won’t read, let alone care for, the full report explaining “this is how we proved it was not a banana; an orange would not grow at that time of the year; it could have been a pear but the shape of the shadow was not right; avocado would not grow in the same area”, etc.

dutch_osintguy gives us several nightmare scenarios, already happening, that show how bad things get when AI is badly used for OSINT:

Such a scenario is normally impossible. Normally, a study producing such evidence would not hold for three minutes in public before some random person double-checks and spots the mistake. And any analyst who knew that he or she would have to face this level of readership would double-check the location, even after trusting AI in the first place, just to avoid looking like a fool in front of everyone.

This scenario can only happen if the analyst trusts the readership not to investigate the reproducibility of the study. It normally can’t happen if the study is made public and the reader community does its job of properly reading the OSINT and screams when it reads a sentence like “AI told me it was Paris”.

Is it OSINT? Is it more than collection? If it is an “INT” and follows the intelligence cycle, the first step is “requirement”. In other words, asking yourself the question: what exactly am I researching in investigating a profile and why?

If the AI tells you the profile is an “activist” and you did not check what type of activism, you are a bad reader.

Intelligence is a tool to help policymakers make decisions. But if a policymaker trusts the “activist” label without even investigating what type of activism it is, then the problem lies with policymakers who do not know politics.

Now, this scenario is actually not that simple. The far right plays on what is known as “metapolitics”: doing politics while hiding it. AI is very bad at detecting metapolitics, which is sometimes very tricky even for humans. For instance, the profile would advertise activism like “feeding the poor”. Then, by investigating, you would realise that the target’s activity was feeding “white poor people only” and distributing pork soup.

You would detect this tricky case as far-right activism only if you have an anti-far-right angle. In other words: you need a clear policy, a reason for investigating. OSINT will tell you whether something is far right or not only if that is why you used OSINT in the first place.

Here again we have the problem of people treating OSINT as a magical tool that will automatically deliver everything. It is like throwing a hammer into the air and expecting it to magically find the nails. “OSINT me this guy” is the kind of thing you can hear without any AI involved, and it returns the very same kind of bad results.

OSINT is time-consuming and runs into an overabundance of data. So you must integrate the whole intelligence cycle into the collection process.

What am I looking for?

How can I find it?

How much time does it take to translate or process this piece and is it worth it?

Will my reader be able to trace back how I found this?

You ask yourself all these questions while collecting, and they shape and shift your collection every step of the way. AI gives the illusion that all of this can be skipped. Again, that works only for a lazy reader who will not bother to check whether you asked yourself these questions and whether your collection is the result of this process. If the client buying your jewellery tells you he does not care whether it is real gold or not, you might as well sell him sand.

dutch_osintguy puts it this way:

“And here’s the kicker: in each case, the analyst didn’t fail because of bad intent or laziness. They failed because the tools were just good enough to feel trustworthy and just wrong enough to be dangerous.”

We have an OSINT management problem. Or, more realistically, we have a management problem with OSINT. OSINT does not work top-down (“do me some OSINT”) and bottom-up (“bullet-point conclusions”). It is a horizontal process in which the reader, the consumer, is a full part of the process.

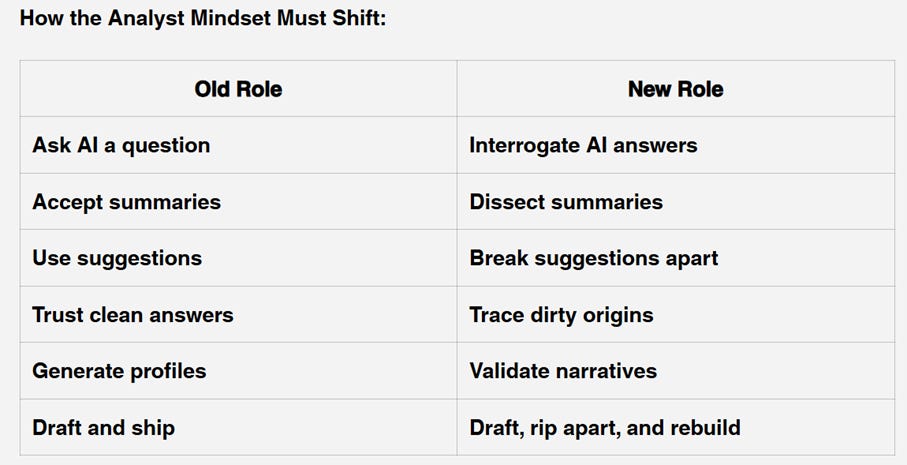

When analysts become dependent on outputs instead of building their own reasoning they lose what makes OSINT powerful: the ability to interpret, interrogate, and pivot.

To paraphrase this excellent point: when the reader becomes dependent on outputs instead of understanding the reproducible reasoning, he loses what makes OSINT so powerful: an intelligence product that helps decision-making.

What happens when the boss is totally blind? When the leader does not question any conclusion brought to him? When the policymaker thinks that the label OSINT is self-sufficient, and there is no need to even read the thing? If you know your boss is not going to care one bit about metadata, timestamps or street signs, and is going to go straight to the conclusion written in bold, “LOCATION”, why bother including all that in your report? Why bother double-checking it?

We are using AI in OSINT just as our bad managers are using OSINT: I want the answer, I don’t care how it was obtained.

A certain readership has developed over the last few years that does not double-check, does not assess reproducibility, does not care for raw data, and simply does not click on the link. Such readers don’t deserve anything more than AI OSINT.

If an AI gives me an answer to a question, I would demand the raw source and the reasoning, and I would ask myself if someone else asking the same question would be given the same answer and reasoning, and I would try to understand why that is not the case, and I would then try to figure out what in the source code makes the difference, then I would try to access the source code, and to see if there is any training available or a free “AI coding tutorial” on the internet… And I would now be very deep in what OSINT people know well: a Rabbit Hole.

Readers can’t escape the rabbit hole phenomenon. They must confront it and, like us, learn to deal with it by refocusing the purpose of their reading, clearly defining what they are looking for and asking themselves the questions we mentioned above.

Because our OSINT readers do not do their job, we have to turn ourselves into OSINT readers and do the job for them. What dutch_osintguy describes as the “old role / new role” faced by OSINT analysts using AI looks incredibly like what a bad reader does and what a good reader should be doing.

And dutch_osintguy’s description of how OSINT analysts went down the slippery slope with AI looks incredibly like how (bad) managers and lazy policymakers have been treating OSINT in the last few years.

Only caring for summaries, accepting unchecked citations, giving up judgement “because it is OSINT = it is like Bellingcat = it is true”.

Too often we get the question “how can I tell the difference between good OSINT and bad OSINT?” Every time, we OSINT practitioners answer “well, OSINT is a reproducible process, and this is what makes it trustworthy”. But policymakers do not want “trustworthy through reproduction”; they want a “100% certainty label”.

So, they started to treat OSINT just like that, a 100% certainty label.

And when it failed, they asked for the creation of a “good OSINT” label that would give them the holy grail of 100% certainty. “OSINT” said it = true; AI said it = true; CIA said it = true… But the truth is that OSINT is not the magical thing that is going to solve the trust issues we have with intelligence services, governments and the media. You know that retweeting a news article without reading it is bad. Sometimes you do it, but you know it is risky. The crisis we have now is that our readers dream of being able to retweet OSINT without reading it and without any risk.

The truth is that the head of the CIA, when he or she reads a report, will not automatically send it to the president with the label “INTELLIGENCE” without reading it carefully, confronting it with his or her own view, looking for blind spots in the investigation, testing some of the conclusions against other sources... And the president should not blindly say “oh, it is labelled intelligence, so it is true, and this is the automatic decision that goes with it”. OSINT is not really made for Trump and Musk.

If the system acts like this, it all collapses. The intelligence cycle is exactly that: a cycle. Lazy policymakers, bad managers, incompetent bosses: their failings rain down on the whole chain. And if you are not a responsible and demanding intelligence consumer, you can only get bad intelligence, OSINT or any other INT.

This crisis is very real and asking analysts to use AI responsibly is the best way to patch the problem.

But the real issue runs deeper. I am not saying we need better policymakers (yet); we need better OSINT consumers who rise to their responsibilities. Otherwise, OSINT analysts are just going to behave like irresponsible AIs…